Ethics and Inclusion

Much of the focus of Inclusive Engineering overlaps with the discussion of engineering ethics. Producing engineering outputs and outcomes that are inclusive and accessible to all, that acknowledge the need for equality, that avoid built-in biases, that acknowledge the social justice requirements of our engineering solutions, that rely on our values as well as our principles, and that leave no-one behind: all of these aims can be seen as both inclusive and ethical.

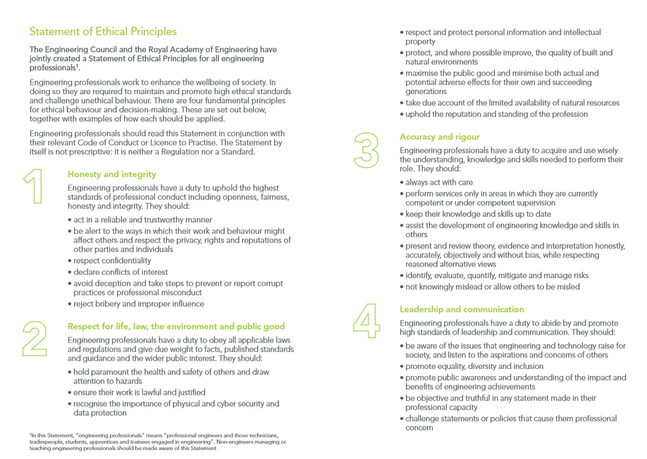

Under the Statement of Ethical Principles 2017, all registered Professional Engineers have a duty to comply with the guidance issued jointly by the Engineering Council and the Royal Academy of Engineering. The Code of Conduct has four principles by which, as professional engineers, we are bound to abide:

- Honesty and integrity

- Respect for life, law, the environment and public good

- Accuracy and rigour

- Leadership and communication

Within the Leadership and Communication principle, engineering professionals should:

- be aware of the issues that engineering and technology raise for society, and listen to the aspirations and concerns of others

- promote equality, diversity and inclusion

- promote public awareness and understanding of the impact and benefits of engineering achievements

- be objective and truthful in any statement made in their professional capacity

- challenge statements or policies that cause them professional concern

Guidance on what it means to 'promote equality, diversity and inclusion' as a Professional Engineer will be published by the Engineering Council in due course.

Inclusion in UK-SPEC

UK-SPEC is the Standard for Professional Engineering Competence, which sets out the competence and commitment required for registration as a professional engineer.

The revised fourth edition of UK-SPEC was published on 31 August 2020 and will be implemented by 31 December 2021. It contains a new requirement for Inclusive Design, along with the requirement to demonstrate personal and social skills and awareness of diversity and inclusion issues.

Ethics of Digital Technology

In addition to the four ethical principles described in the Statement of Ethical Principles, it is worth expanding on the values and ethical principles that need to be considered when developing new digital technology, where those principles must effectively be hardwired or programmed into the technology during development. This applies to artificial intelligence systems, the use of big data, machine learning, the internet of things, robotic systems and other similar digital technology. Ethical decisions will need to be made which relate to fairness, equality and social justice, and decisions taken during development may be difficult to reverse, and even difficult to detect, once the technology is in use. For example, driverless vehicles will need to be pre-programmed to take certain actions in a particular set of circumstances, depending on somebody’s view of which action is more acceptable. This could include deciding in advance whether a vehicle will preferentially collide with a pedestrian or with another road vehicle in the event of an unavoidable accident.

Other ethical decisions might include whether it is acceptable to develop technology that allows deepfake videos to be produced, which may influence national security or the outcomes of elections. Whilst these fake videos are technically possible to produce, is producing them ethical? (Read more: Deepfake democracy: Here's how modern elections could be decided by fake news, World Economic Forum, 2020)

Another is whether bias has been introduced into algorithmic decision-making processes that determine who is shortlisted for a job, who is approved for a mortgage, or who is given a criminal sentence. (Read about the work of the Ada Lovelace Institute, an independent research institute and deliberative body with a mission to ensure data and AI work for people and society.)
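One simple way to start looking for this kind of bias is to compare how often each group receives a favourable outcome. The sketch below is illustrative only: the function names, the group labels and the sample data are all hypothetical, and a low ratio is a prompt for further investigation rather than proof of bias or a complete fairness audit.

```python
from collections import Counter


def selection_rates(records):
    """Return the shortlisting rate for each group.

    `records` is an iterable of (group, shortlisted) pairs, where
    `shortlisted` is True or False. The structure is an assumption
    made for this example.
    """
    totals = Counter()
    selected = Counter()
    for group, shortlisted in records:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was shortlisted, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up data for illustration, not real outcomes.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

In this made-up sample, group_b is shortlisted half as often as group_a. Whether a gap like that reflects unfair treatment depends on context, which is why such a check supports, but cannot replace, the ethical judgement discussed here.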

It is worth bearing in mind the quote from the film Jurassic Park: “Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.”

For further reading on the ethics of digital technology, see the Ethical OS Framework (ethicalos.org), which describes eight risk zones to consider when developing digital technology:

- Truth, Disinformation, and Propaganda

- Addiction & the Dopamine Economy

- Economic & Asset Inequalities

- Machine Ethics & Algorithmic Biases

- Surveillance State

- Data Control & Monetization

- Implicit Trust & User Understanding

- Hateful & Criminal Actors

Questions to consider across these risk zones include:

- How could bad actors use your technology to subvert or attack the truth?

- How could someone use this technology to undermine trust in established social institutions, like media, medicine, democracy, science?

- What does “extreme” use, addiction or unhealthy engagement with your technology look like?

- Will people or communities who don’t have access to this technology suffer a setback compared to those who do? What does that setback look like? What new differences will there be between the “haves” and “have-nots” of this technology?

- Are you using machine learning and robots to create wealth, rather than human labor?

- Does this technology make use of deep data sets and machine learning? If so, are there gaps or historical biases in the data that might bias the technology?

- Have you seen instances of personal or individual bias enter into your product’s algorithms?

- How might a government or military body utilize this technology to increase its capacity to survey or otherwise infringe upon the rights of its citizens?

- Will the data your tech is generating have long-term consequences for the freedoms and reputation of individuals?

- If you profit from the use or sale of user data, do your users share in that profit? What options would you consider for giving users the right to share profits on their own data?

- Does your technology do anything your users don’t know about, or would probably be surprised to find out about? If so, why are you not sharing this information explicitly—and what kind of backlash might you face if users found out?

- How could someone use your technology to bully, stalk, or harass other people?

Equality Act 2010

The Equality Act 2010 is the UK legislation which legally protects people from discrimination in the workplace and in wider society. It replaced previous anti-discrimination laws with a single Act, making the law easier to understand and strengthening protection in some situations. It ensures consistency in what employers and employees need to do to make their workplaces a fair environment and comply with the law. The protected characteristics include age, disability, gender reassignment, race, religion or belief, sex, sexual orientation, marriage and civil partnership, and pregnancy and maternity. New measures from April 2017 stem from the introduction of the Equality Act 2010 (Gender Pay Gap Information) Regulations 2017. Further information is available from EqualEngineers.

Public Sector Equality Duty (PSED)

The Public Sector Equality Duty (PSED) requires public bodies, and others carrying out public functions, to have due regard to the need to eliminate discrimination, advance equality of opportunity and foster good relations between different people when carrying out their activities. In the engineering sector, any organisation that provides services or products to the public must comply with the Public Sector Equality Duty.

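The characteristics that are protected by the Equality Act 2010 are:

- Age

- Disability

- Gender reassignment

- Marriage and civil partnership

- Pregnancy and maternity

- Race

- Religion or belief

- Sex

- Sexual orientation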

Positive Action is when an employer takes steps to help or encourage certain groups of people who have different needs, or who are disadvantaged in some way, to access work or training. Positive action is lawful under the Equality Act.

Positive Discrimination is unlawful.