Inclusive Digital Technology

Take a look at the compilation of press articles below to find out how bias and discrimination are being built into our digital technology.


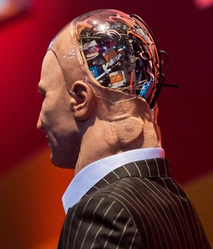

How we Build Fair AI Systems and Less-Biased Humans

Artificial intelligence (AI) offers enormous potential to transform our businesses, solve some of our toughest problems and inspire the world to a better future. But our AI systems are only as good as the data we put into them. As AI becomes increasingly ubiquitous in all aspects of our lives, ensuring we’re developing and training these systems with data that is fair, interpretable and unbiased is critical. Read more here from IBM.

Other links: How Biased AI can teach us to be better humans

Gender Differences and the Implication for Protective Defence Equipment

In the Civilian American and European Surface Anthropometry Resource (CAESAR) project, men and women were sampled in approximately equal numbers, making it an ideal source for understanding gender differences. Stepwise Discriminant Analyses were done using the 97 one-dimensional measurements collected in CAESAR. The results indicate an unprecedented separation of male and female body shapes. All three regions had at least 98.5% accuracy in predicting gender with seven or fewer measurements. Some important body proportion differences between men and women will impact the fit and effectiveness of many types of protective apparel, such as flight suits, anti-g suits, cold-water immersion suits and chem.-bio protective suits. While women are smaller than men on average for many body measurements, women are larger than men in some important aspects. For example, women are significantly larger than men in seated hip breadth in all three populations (26 mm larger on average) while at the same time significantly smaller than men in shoulder breadth (54 mm smaller on average). CAESAR also has the advantage of providing 3D models of all subjects. This capacity was also used to provide visual comparisons of subjects, which is helpful for understanding the differences and deriving solutions. Read More.

Links: Development and Implementation of the Aircrew Modified Equipment Leading to Increased Accommodation (AMELIA) Program

How Search Engines Affect the Information we Find

'Pop a few letters into the text box of your chosen search engine and it’s likely that, before you finish typing, a handful of suggested phrases will have appeared. These autocomplete choices are based on millions of queries inputted by other users before you. They can seem helpful, saving you a few seconds of typing or even offering alternative ways of phrasing your query.' But is this biasing your thinking? Read more.


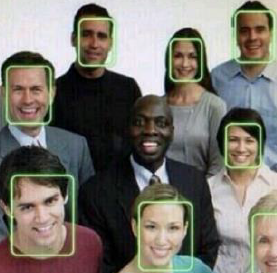

Facial Recognition is Accurate, If you're a White Guy!

Facial recognition technology is improving by leaps and bounds. Some commercial software can now tell the gender of a person in a photograph. When the person in the photo is a white man, the software is right 99 percent of the time.

But the darker the skin, the more errors arise... Read more.

Why AI must resist our bad habit of stereotyping human speech

Voice control gadgets – such as Amazon’s Alexa, Google’s Home or Apple’s HomePod – are becoming increasingly popular, but people should pause for thought about advances in machine learning that could lead to applications understanding different emotions in speech. Read More.

The Shirley Card

For many years, this "Shirley" card — named after the original model, who was an employee of Kodak — was used by photo labs to calibrate skin tones, shadows and light during the printing process. But what if you didn't look like Shirley? What if your skin was black? Read more.

A brief history of colour photography reveals an obvious but unsettling reality about human bias. Read More.

Music Recommendation Algorithms

'These days, more and more people listen to music on streaming apps – in early 2020, 400 million people were subscribed to one. These platforms use algorithms to recommend music based on listening habits. The recommended songs might feature in new playlists or they might start to play automatically when another playlist has ended, but what the algorithms recommend is not always fair.' Read more.


Is this Soap Dispenser Racist?

Controversy as Facebook employee shares video of machine that only responds to white skin. Read more...

Turns Out Algorithms are Racist

Artificial intelligence is becoming a greater part of our daily lives, but the technologies can contain dangerous biases and assumptions - and we're only beginning to understand the consequences. Read More...

Why Facial Recognition Finds it Hard to Recognise Black Faces

Here's a perfect example of how hiring biases can affect the businesses that sell code and the consumers who buy it: "Every time a manufacturer releases a facial-recognition feature in a camera, almost always it can’t recognize black people. The cause of that is the people who are building these products are white people, and they’re testing it on themselves. They don’t think about it." Read More.

Biometric Technology

'Biometric technologies, from facial recognition to digital fingerprinting, have proliferated through society in recent years. Applied in an increasing number of contexts, the benefits they offer are counterbalanced by numerous ethical and societal concerns.' Read the Ada Lovelace Institute Citizens' Biometric Council Report here.

What can we learn from India's Aadhaar programme? Read more.

An artificial intelligence tool that has revolutionised the ability of computers to interpret everyday language has been shown to exhibit striking gender and racial biases.

The findings raise the spectre of existing social inequalities and prejudices being reinforced in new and unpredictable ways as an increasing number of decisions affecting our everyday lives are ceded to automatons.

In the past few years, the ability of programs such as Google Translate to interpret language has improved dramatically. These gains have been thanks to new machine learning techniques and the availability of vast amounts of online text data, on which the algorithms can be trained. Read More.
